This project will explore the security and privacy challenges inherent in the tokenization of real-world assets (RWAs) using blockchain technology. As industries increasingly adopt tokenization to digitize and trade assets like real estate, commodities, and fine art, ensuring the security and privacy of these transactions becomes critical. The research will focus on identifying vulnerabilities in existing tokenization frameworks, analyzing potential risks, and developing novel security protocols to protect sensitive data and ensure the integrity of tokenized assets.

Research projects in Information Technology


Understanding and detecting mis/disinformation

Mis/disinformation (also known as fake news), in the era of digital communication, poses a significant challenge to society, affecting public opinion, decision-making processes, and even democratic systems. We still know little about the features of this communication, the manipulation techniques employed, and the types of people who are more susceptible to believing this information.

This project builds on Prof Whitty's work in this field to address one of the issues above.

Human Factors in Cyber Security: Understanding Cyberscams

Online fraud, also referred to as cyberscams, is increasingly becoming a cybersecurity problem that technical cybersecurity specialists are unable to detect effectively. Given the difficulty of detecting scams automatically, the onus of detection is often pushed back onto humans. Gamification and awareness campaigns are regularly researched and implemented in workplaces to prevent people from being tricked by scams, which may lead to identity theft or to individuals being conned out of money.

Privacy-preserving machine unlearning

Design efficient privacy-preserving methods for different machine learning tasks, including training, inference, and unlearning.

Enhancing Privacy Preservation in Machine Learning

This research project aims to address the critical need for privacy-enhancing techniques in machine learning (ML) applications, particularly in scenarios involving sensitive or confidential data. With the widespread adoption of ML algorithms for data analysis and decision-making, preserving the privacy of individuals' data has become a paramount concern.

Securing Generative AI for Digital Trust

LLM models for learning and retrieving software knowledge

The primary objective of this project is to enhance Large Language Models (LLMs) by incorporating software knowledge documentation. Our approach involves utilizing existing LLMs and refining them using data extracted from software repositories. This fine-tuning process aims to enable the models to provide answers to queries related to software development tasks.

[Malaysia] AI meets Cybersecurity

AI is now trending and is impacting diverse application domains beyond IT, from education (ChatGPT) to natural sciences (protein analysis) to social media.

This PhD research focuses on fusing AI research and cybersecurity research. One current direction is advancing the latest generative AI models for cybersecurity, or vice versa: using cybersecurity techniques to attack AI.

The student is free to discuss with the supervisor which topic is of most interest to them, in order to shape the PhD topic.

Privacy-Enhancing Technologies for the Social Good

Privacy-Enhancing Technologies (PETs) are a set of cryptographic tools that allow information processing in a privacy-respecting manner. As an example, imagine we have a user, say Alice, who wants to get a service from a service provider, say SerPro. To provide the service, SerPro requests Alice's private information such as a copy of her passport to validate her identity. In a traditional setting, Alice has no choice but to give away her highly sensitive information.

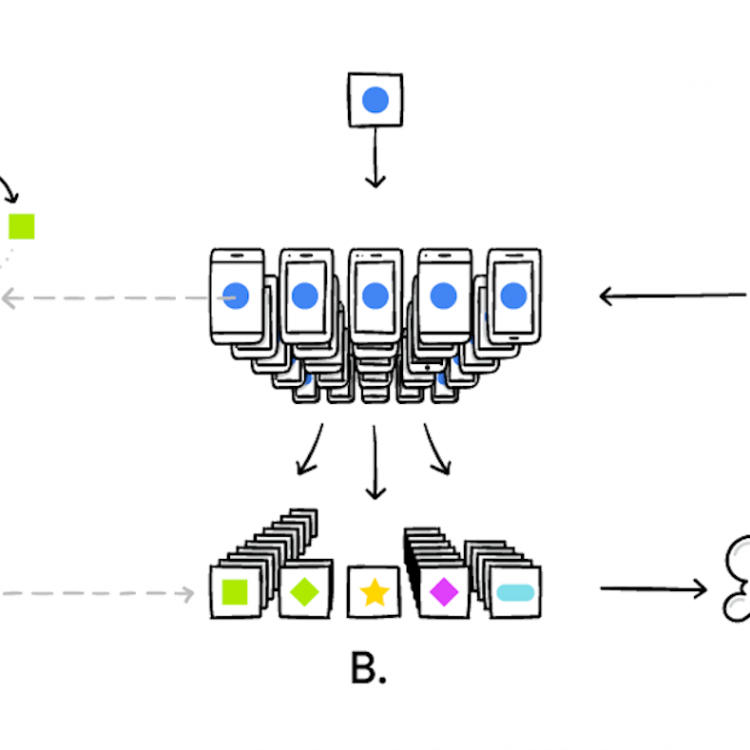

[NextGen] Secure and Privacy-Enhancing Federated Learning: Algorithms, Framework, and Applications to NLP and Medical AI

Federated learning (FL) is an emerging machine learning paradigm that enables distributed clients (e.g., mobile devices) to jointly train a machine learning model without pooling their raw data on a centralised server. Because data never leaves the clients, FL systematically mitigates the privacy risks of centralised machine learning and naturally complies with rigorous data privacy regulations, such as GDPR and the Privacy Act 1988.
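
The training loop described above can be illustrated with a minimal sketch. It assumes federated averaging (FedAvg), a common aggregation scheme that the project description does not itself name, and a toy one-parameter model y = w * x. The function names `local_update`, `federated_average`, and `train`, along with the learning rate and round counts, are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# (x, y) pairs held privately by a single client; never sent to the server.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one client's data for y = w * x."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine client weights, weighting each by its number of local samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def train(clients: List[Dataset], rounds: int = 50) -> float:
    """Server loop: broadcast w, collect locally trained weights, average them."""
    w = 0.0
    for _ in range(rounds):
        # Only the updated weight and a sample count leave each client.
        updates = [(local_update(w, data), len(data)) for data in clients]
        w = federated_average(updates)
    return w


if __name__ == "__main__":
    # Three hypothetical clients whose private data all follow y = 2 * x.
    clients = [
        [(1.0, 2.0), (2.0, 4.0)],
        [(3.0, 6.0)],
        [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
    ]
    print(train(clients))
```

In this sketch the server only ever sees model weights and sample counts. Shared weights can still leak information about the underlying data, which is one reason secure and privacy-enhancing variants of FL are a research topic in their own right.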