Development of a GIS-Based Model for Active Citizenry

Research projects in Information Technology


Street-Level Environment Recognition On Moving Resource-Constrained Devices


Bayesian Generative AI (PhD Project)

For better or for worse, Generative AI is changing our world.

Explainability and Compact representation of K-MDPs

Markov Decision Processes (MDPs) are frameworks for modelling decision-making in situations where outcomes are partly random and partly under the control of a decision maker. While small MDPs are inherently interpretable, MDPs with thousands of states are difficult for humans to understand. The K-MDP problem is that of finding the best MDP with at most K states, using state-abstraction approaches that aggregate the original states into groups.
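To make state aggregation concrete, here is a minimal Python sketch. It is not the project's method. The sketch assumes a small tabular MDP and solves it with value iteration. It then groups states into at most K buckets by their optimal value, which is one simple abstraction criterion among many. Finally, it builds the abstract MDP by averaging rewards and transitions uniformly over each group. The function names `value_iteration`, `aggregate_by_value` and `abstract_mdp`, the list-based MDP encoding, and the four-state chain example are all hypothetical.

```python
from typing import List, Tuple

# Hypothetical tabular MDP encoding: P[s][a] lists (next_state, probability)
# pairs and R[s][a] is the reward for taking action a in state s.
Transitions = List[List[List[Tuple[int, float]]]]
Rewards = List[List[float]]


def value_iteration(P: Transitions, R: Rewards, gamma: float = 0.9,
                    tol: float = 1e-8) -> List[float]:
    """Return an approximation of the optimal state values V*."""
    V = [0.0] * len(P)
    while True:
        new_V = [max(R[s][a] + gamma * sum(p * V[t] for t, p in P[s][a])
                     for a in range(len(P[s])))
                 for s in range(len(P))]
        if max(abs(x - y) for x, y in zip(new_V, V)) < tol:
            return new_V
        V = new_V


def aggregate_by_value(V: List[float], k: int) -> List[int]:
    """Assign each state to one of at most k groups by splitting the
    range of V* into k equal-width buckets."""
    lo, hi = min(V), max(V)
    width = (hi - lo) / k or 1.0  # all values equal: everything in bucket 0
    raw = [min(int((v - lo) / width), k - 1) for v in V]
    # Relabel the non-empty buckets as 0, 1, ... so no abstract state is empty.
    relabel = {b: i for i, b in enumerate(sorted(set(raw)))}
    return [relabel[b] for b in raw]


def abstract_mdp(P: Transitions, R: Rewards,
                 groups: List[int]) -> Tuple[Transitions, Rewards]:
    """Build the abstract MDP, weighting the ground states of each group
    uniformly (assumes every state has the same number of actions)."""
    k = max(groups) + 1
    members = [[s for s, g in enumerate(groups) if g == c] for c in range(k)]
    P_abs: Transitions = []
    R_abs: Rewards = []
    for c in range(k):
        w = 1.0 / len(members[c])
        P_c, R_c = [], []
        for a in range(len(P[0])):
            probs = [0.0] * k
            for s in members[c]:
                for t, p in P[s][a]:
                    probs[groups[t]] += w * p  # mass flowing into group of t
            P_c.append([(d, q) for d, q in enumerate(probs) if q > 0])
            R_c.append(sum(w * R[s][a] for s in members[c]))
        P_abs.append(P_c)
        R_abs.append(R_c)
    return P_abs, R_abs


# Example: a 4-state chain; action 0 stays, action 1 moves right,
# and only state 3 yields reward.
P = [[[(s, 1.0)], [(min(s + 1, 3), 1.0)]] for s in range(4)]
R = [[1.0 if s == 3 else 0.0] * 2 for s in range(4)]
groups = aggregate_by_value(value_iteration(P, R), k=2)
P_abs, R_abs = abstract_mdp(P, R, groups)
```

With K = 2, the two states far from the reward collapse into one abstract state, and the two states near it collapse into the other. The result is a smaller MDP that a person can inspect directly. A real K-MDP method would instead choose the grouping to minimise the loss in value caused by the abstraction.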

Creating a 21st Century Helpline for Enhanced Support and Continuity of Care

Turning Point is a renowned addiction treatment and research centre specialising in prevention, treatment, and support services for individuals affected by substance use disorders, gambling addiction, and mental health issues. Turning Point operates a network of 26 helplines across the country, providing accessible and immediate support to individuals in need. These helplines are a vital resource for people seeking assistance, information, and guidance on addiction and mental health concerns.

Formally Verified Automated Reasoning in Non-Classical Logics

Classical propositional logic (CPL) captures our basic understanding of the linguistic connectives “and”, “or” and “not”. It also provides a very good basis for digital circuits. But it does not account for more sophisticated linguistic notions such as “always”, “possibly”, “believed” or “knows”. Philosophers therefore invented many different non-classical logics which extend CPL with further operators for these notions.
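As an illustration of how such extra operators can be given a precise meaning, the following minimal Python sketch evaluates formulas of basic modal logic over a Kripke model, using the standard possible-worlds semantics. It is a model checker for one fixed model, not an automated reasoner that decides validity, which is the harder problem such projects address. The tuple encoding of formulas, the class name `KripkeModel` and the three-world example are hypothetical. `"box"` stands for the operator read as "always" or "necessarily" and `"dia"` for "possibly". Readings such as "believed" or "knows" typically come from imposing extra conditions on the accessibility relation.

```python
from typing import Dict, Set, Tuple, Union

# Hypothetical formula encoding: an atom is a string such as "p";
# compound formulas are tuples ("not", A), ("and", A, B), ("or", A, B),
# ("box", A) for "necessarily/always A" and ("dia", A) for "possibly A".
Formula = Union[str, Tuple]


class KripkeModel:
    def __init__(self, succ: Dict[int, Set[int]], val: Dict[int, Set[str]]):
        self.succ = succ  # accessibility relation: world -> accessible worlds
        self.val = val    # valuation: world -> atoms true at that world

    def holds(self, w: int, f: Formula) -> bool:
        """Evaluate formula f at world w."""
        if isinstance(f, str):
            return f in self.val[w]
        op = f[0]
        # The classical connectives are evaluated exactly as in CPL.
        if op == "not":
            return not self.holds(w, f[1])
        if op == "and":
            return self.holds(w, f[1]) and self.holds(w, f[2])
        if op == "or":
            return self.holds(w, f[1]) or self.holds(w, f[2])
        # The modal operators quantify over accessible worlds.
        if op == "box":  # A holds at every accessible world
            return all(self.holds(v, f[1]) for v in self.succ[w])
        if op == "dia":  # A holds at some accessible world
            return any(self.holds(v, f[1]) for v in self.succ[w])
        raise ValueError(f"unknown operator: {op!r}")


# Example: world 0 sees worlds 1 and 2; "p" is true at worlds 1 and 2 only.
m = KripkeModel(succ={0: {1, 2}, 1: {1}, 2: set()},
                val={0: set(), 1: {"p"}, 2: {"p"}})
print(m.holds(0, ("box", "p")))  # p holds at every world 0 can see
```

Note that `("box", "p")` is true at world 2 only vacuously, because world 2 sees no worlds. Edge cases like this are one reason different logics restrict the accessibility relation.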

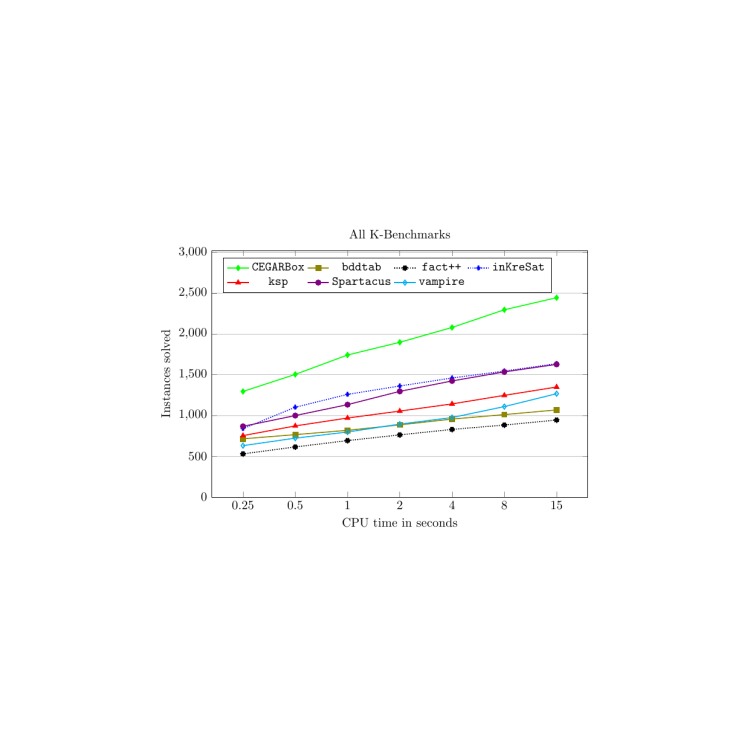

Efficient CEGAR-tableaux for Non-classical Logics

Classical propositional logic (CPL) captures our basic understanding of the linguistic connectives “and”, “or” and “not”. It also provides a very good basis for digital circuits. But it does not account for more sophisticated linguistic notions such as “always”, “possibly”, “believed” or “knows”. Philosophers therefore invented many different non-classical logics which extend CPL with further operators for these notions.

Measuring The Birrarung: Data Fusion and Optimisation

This project will result in a much fuller understanding of the state of the Birrarung than is currently possible, as well as qualitative and quantitative results to model different interventions and their effect on swimmability.

The project will build tools and techniques to understand and decide on effective interventions to improve the Birrarung’s swimmability.

Blackbox Multi-Objective Optimization of Unknown Functions

In many fields (e.g., Artificial Intelligence, Engineering), problems are modelled using functions (e.g., f(x) = y). At a very high level, we can think of Machine Learning as the problem of approximating a function f from pairs of measurements (x, y), and Optimization as the problem of finding the input x that maximizes the output y of a given function f.
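When there are several objectives, "the x that maximizes y" is generally no longer unique. One usually looks instead for Pareto-optimal inputs: inputs for which no other input is at least as good on every objective and strictly better on at least one. The minimal Python sketch below illustrates this under simplifying assumptions. It uses a one-dimensional input, treats f as a black box that can only be queried, and uses plain random search as the optimiser. It is not the project's method, which would presumably aim to find good trade-offs with far fewer evaluations. The names `blackbox`, `random_search` and `pareto_front`, and the search bounds, are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, Tuple[float, ...]]  # (input x, objective vector f(x))


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if objective vector a Pareto-dominates b when maximising:
    a is at least as good in every objective and strictly better in one."""
    return (all(x >= y for x, y in zip(a, b))
            and any(x > y for x, y in zip(a, b)))


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep the evaluated points that no other evaluated point dominates."""
    return [p for p in points
            if not any(dominates(q[1], p[1]) for q in points)]


def random_search(f: Callable[[float], Tuple[float, ...]], lo: float,
                  hi: float, n_evals: int, seed: int = 0) -> List[Point]:
    """Query the black box f at uniformly random inputs in [lo, hi] and
    return the non-dominated points found (a baseline, not a smart optimiser)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_evals):
        x = rng.uniform(lo, hi)
        samples.append((x, f(x)))
    return pareto_front(samples)


def blackbox(x: float) -> Tuple[float, float]:
    """Hypothetical black box with two conflicting objectives:
    the first peaks at x = 1, the second at x = -1."""
    return (-(x - 1.0) ** 2, -(x + 1.0) ** 2)


front = random_search(blackbox, -3.0, 3.0, n_evals=200)
print(f"{len(front)} non-dominated points out of 200 evaluations")
```

For this toy `blackbox`, every input between -1 and 1 is a trade-off between the two objectives. The returned front therefore approximates that interval rather than a single best point.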

NeuroDistSys (NDS): Optimized Distributed Training and Inference on Large-Scale Distributed Systems

In NeuroDistSys (NDS), we aim to design and implement cutting-edge techniques for optimizing the training and inference of Machine Learning (ML) models across large-scale distributed systems. Leveraging advanced AI and distributed computing strategies, the project focuses on deploying ML models on real-world distributed infrastructures. The goal is to improve system performance, scalability, and efficiency by optimizing resource usage (e.g., GPUs, CPUs, and energy consumption).
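The project's own techniques are not described here. As a hypothetical illustration of the kind of baseline that distributed-training work typically starts from, the sketch below simulates synchronous data-parallel training on a single machine. The data are split into equal shards, each simulated worker computes a gradient on its shard, and the gradients are averaged, which is the role an all-reduce plays on a real cluster. Every replica then applies the same update. The linear model, the function names `gradient`, `shard` and `data_parallel_step`, and the toy data are all assumptions chosen for brevity.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def gradient(w: float, b: float, batch: List[Sample]) -> Tuple[float, float]:
    """Gradient of the mean squared error of y ≈ w*x + b over a batch."""
    n = len(batch)
    gw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    gb = sum(2 * (w * x + b - y) for x, y in batch) / n
    return gw, gb


def shard(data: List[Sample], n_workers: int) -> List[List[Sample]]:
    """Split the data round-robin into one shard per simulated worker."""
    return [data[i::n_workers] for i in range(n_workers)]


def data_parallel_step(w: float, b: float, shards: List[List[Sample]],
                       lr: float) -> Tuple[float, float]:
    """One synchronous step: local gradients, averaged, then one update."""
    grads = [gradient(w, b, s) for s in shards]  # would run in parallel
    gw = sum(g[0] for g in grads) / len(grads)   # averaging = all-reduce
    gb = sum(g[1] for g in grads) / len(grads)
    return w - lr * gw, b - lr * gb


# Toy data from y = 2x + 1, split evenly across 4 simulated workers.
data = [(0.5 * i, 2 * (0.5 * i) + 1) for i in range(8)]
shards = shard(data, n_workers=4)
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, shards, lr=0.05)
print(f"w = {w:.4f}, b = {b:.4f}")
```

Because the shards are equally sized, the averaged gradient equals the full-batch gradient. Training therefore follows the same trajectory as on one machine. With unequal shards, the average would have to be weighted by shard size. In practice, the cost that optimisation work targets lies in the communication and scheduling around this averaging step, not in the arithmetic shown here.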